AI vs GPs: Can ChatGPT accurately diagnose these common medical conditions?

As AI's influence grows across job sectors worldwide, including healthcare, how close are we to AI replacing our GPs?

According to the World Economic Forum, automation, including AI, could displace 85 million jobs worldwide by 2025. AI is increasingly being integrated into jobs across the globe, from marketing to healthcare, and it’s a hot topic.

A recent study has rightly generated a lot of excitement: it found that AI was nearly twice as accurate as the current method at grading the aggressiveness of a rare form of cancer from scans.

Could this method of diagnosis be applied to other medical conditions?

Here at Evoluted, we’ve run our own experiment to test ChatGPT’s capabilities as a GP. Our goal was to determine whether AI can accurately diagnose common medical problems.

Can AI correctly diagnose these medical conditions?

We asked DALL·E to generate an AI GP. Allow us to introduce "Doctor A.I Chat," our AI-powered virtual doctor:

Created with DALL·E

Our study focused on evaluating whether ChatGPT, or Doctor A.I Chat, could effectively diagnose medical conditions based on the symptoms listed on the NHS website.

To ensure a comprehensive assessment, we selected a random sample of 40 medical conditions from the NHS site that patients would typically seek a GP's diagnosis for.

We input the NHS list of symptoms per condition into ChatGPT and asked Doctor A.I Chat to provide a diagnosis based on the symptoms and information we gave her.

If the AI suggested the correct medical condition in its response, we marked it as a correct diagnosis. If the correct diagnosis was absent from its suggestions, we marked it as an incorrect diagnosis.

It's important to note that we provided no additional context to the AI. We used the following prompt alongside the list of symptoms:

“Please diagnose this medical condition for me”
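The procedure above boils down to two steps: build a prompt from one condition's symptom list, then mark the answer correct if the target condition appears anywhere among the suggestions. Below is a minimal Python sketch of those two steps, under stated assumptions: the instruction comes before the symptoms, one symptom per line; the AI's suggestions have already been pulled out of its reply as a list of condition names; and matching is a simple case-insensitive name comparison. `build_prompt` and `mark_diagnosis` are hypothetical helpers for illustration only; the experiment itself was run by entering text into ChatGPT.

```python
def build_prompt(symptoms: list[str]) -> str:
    """Combine the fixed instruction with one condition's NHS symptom list."""
    # Assumption: instruction first, then one symptom per line.
    return "Please diagnose this medical condition for me\n" + "\n".join(symptoms)


def mark_diagnosis(target: str, suggestions: list[str]) -> str:
    """Return "Correct" if the target condition is among the AI's suggestions."""
    # Assumption: case-insensitive exact match on condition names. Synonyms
    # (e.g. "piles" vs "haemorrhoids") would still need human judgement.
    wanted = target.strip().lower()
    if any(s.strip().lower() == wanted for s in suggestions):
        return "Correct"
    return "Incorrect"


if __name__ == "__main__":
    print(build_prompt(["Hearing loss", "Tinnitus"]))
    print(mark_diagnosis("Sinusitis", ["sinusitis"]))
    print(mark_diagnosis("Glue ear", ["Ménière's disease"]))
```

With these illustrative inputs, `mark_diagnosis("Glue ear", ["Ménière's disease"])` returns `"Incorrect"`, even though the two conditions share symptoms: the rule only checks whether the named condition was suggested.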

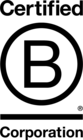

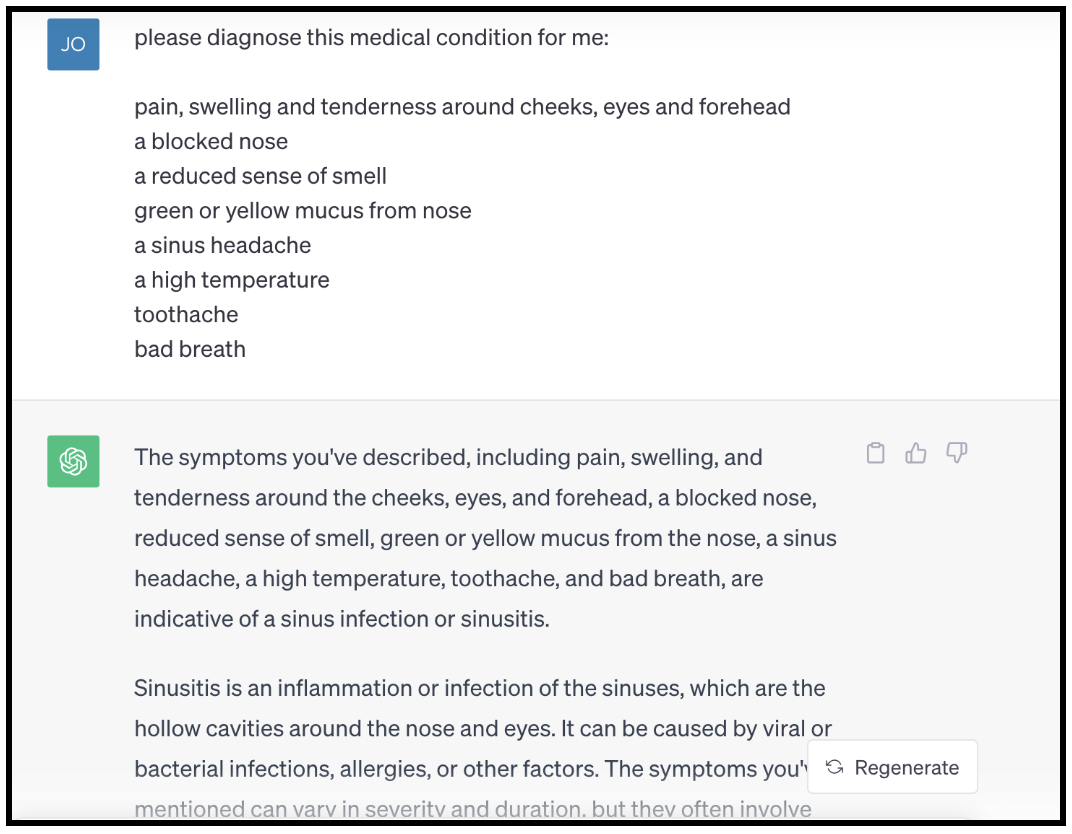

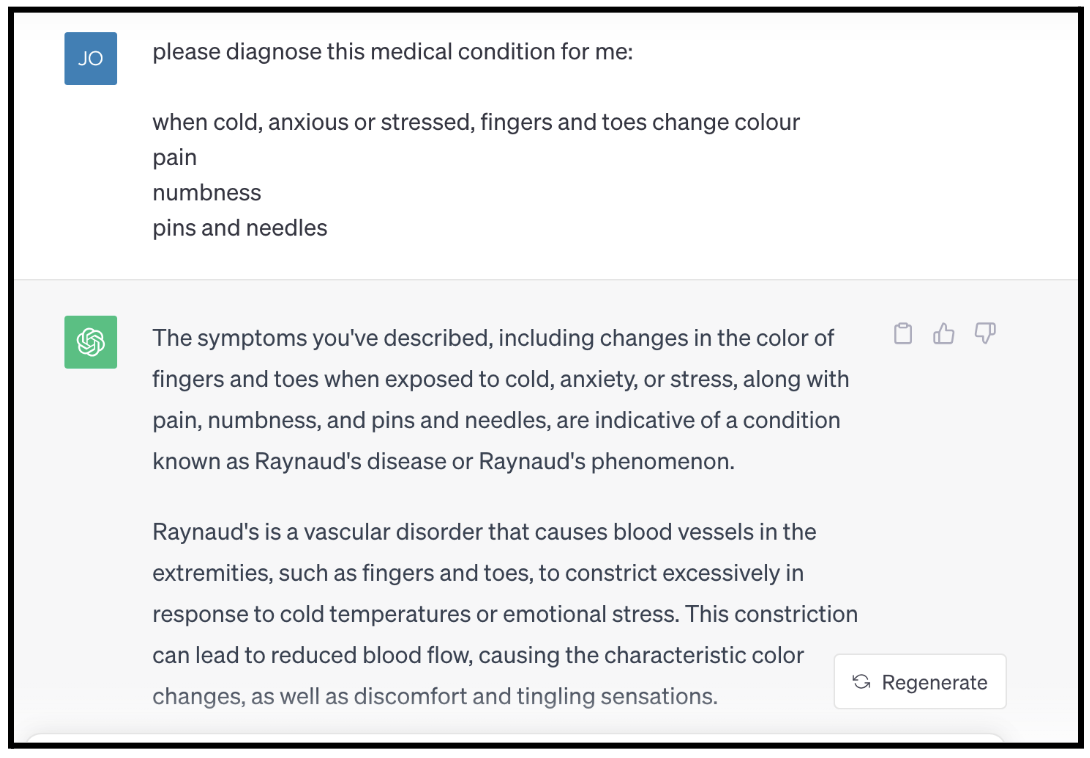

Here are a few examples of the process and the results:

Correct diagnoses

Sinusitis

Raynaud’s

Anaemia

Incorrect diagnoses

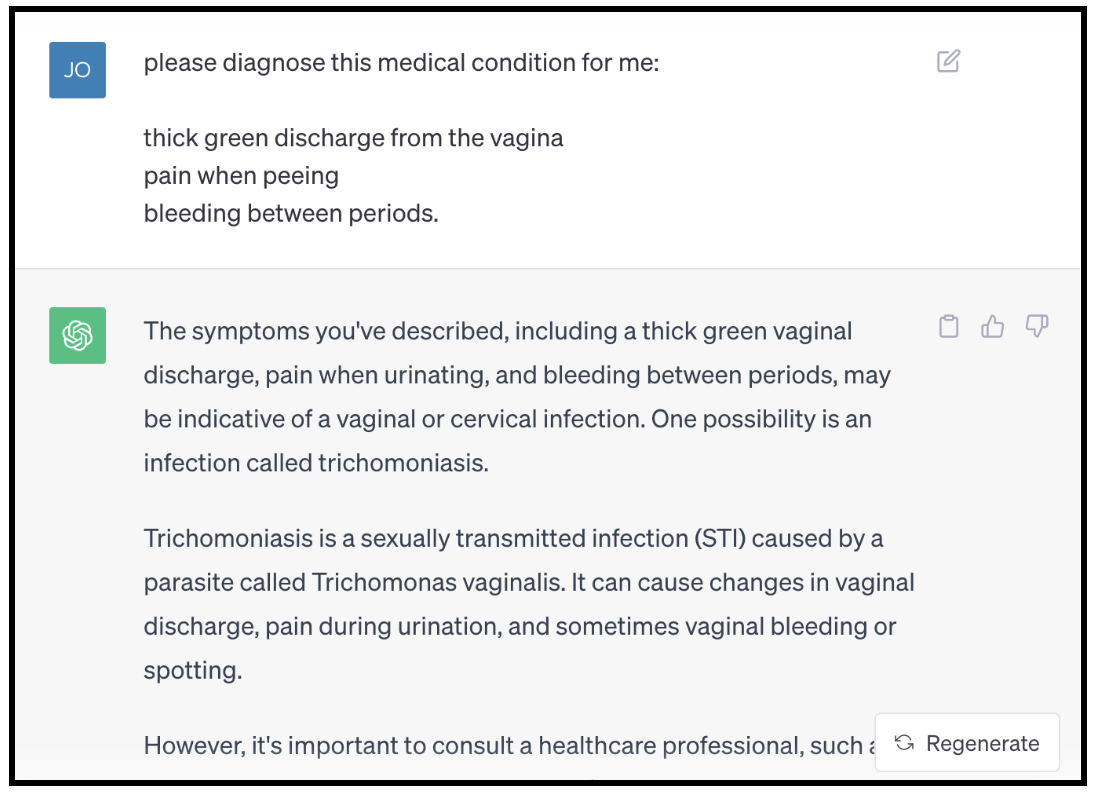

Gonorrhoea

Although its answer for gonorrhoea was marked ‘incorrect’, ChatGPT did suggest trichomoniasis, a condition whose symptoms on the NHS site are very similar.

According to the NHS, trichomoniasis in women can cause similar symptoms:

Abnormal vaginal discharge that may be thick, thin or frothy and yellow-green in colour

Producing more discharge than normal, which may also have an unpleasant fishy smell

Soreness, swelling and itching around the vagina – sometimes the inner thighs also become itchy

Pain or discomfort when passing urine or having sex

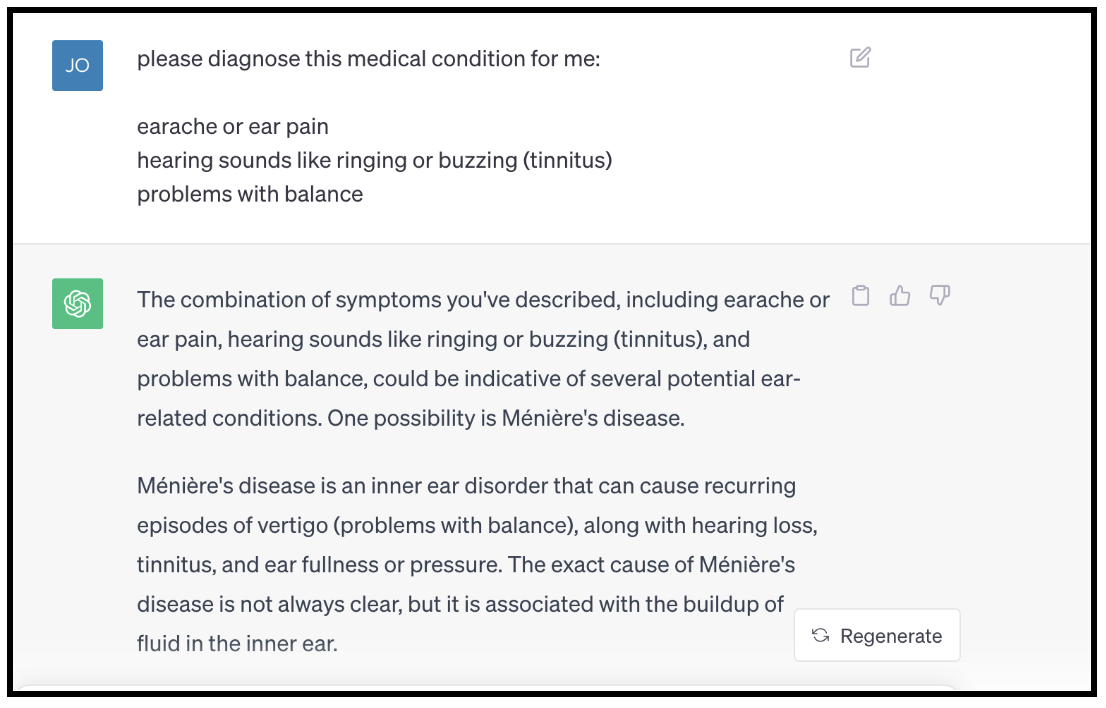

Glue ear

Similarly, for glue ear, ChatGPT ‘incorrectly’ diagnosed Ménière's disease, a condition whose symptoms overlap with those of glue ear.

According to the NHS, symptoms of Ménière's disease include:

Feeling like you or everything around you is spinning (vertigo)

Losing your balance

Ringing or buzzing sounds in 1 or both ears (tinnitus)

Hearing loss

Feeling pressure, discomfort or pain deep inside your ear

Feeling or being sick

ChatGPT correctly diagnosed 90% of the conditions from their symptoms alone

Let’s see how Doctor A.I Chat got on overall. Here is the full list of results:

| Medical condition | Result of AI diagnosis |
| --- | --- |
| Athlete's foot | Correct |
| Bacterial vaginosis | Correct |
| Baker's cyst | Correct |
| Bell's palsy | Correct |
| Bladder stones | Incorrect |
| Bronchiolitis | Correct |
| Cellulitis | Correct |
| Chest infection | Correct |
| Conjunctivitis | Correct |
| Croup | Correct |
| Ear infection | Correct |
| Fibroids | Incorrect |
| Flu | Correct |
| Genital herpes | Correct |
| Glue ear | Incorrect |
| Gonorrhoea | Incorrect |
| Hives | Correct |
| Huntington's disease | Correct |
| Inflammatory bowel disease | Correct |
| Iron deficiency anaemia | Correct |
| Lyme disease | Correct |
| Mastitis | Correct |
| Meningitis | Correct |
| Oral thrush | Correct |
| Piles (haemorrhoids) | Correct |
| Raynaud's | Correct |
| Reflux | Correct |
| Sciatica | Correct |
| Shin splints | Correct |
| Shingles | Correct |
| Sinusitis | Correct |
| Sleep apnoea | Correct |
| Stomach ulcer (peptic ulcer) | Correct |
| Stye | Correct |
| Syphilis | Correct |
| Tinnitus | Correct |
| Tonsillitis | Correct |
| Ulcerative colitis | Correct |
| Underactive thyroid (hypothyroidism) | Correct |
| Whooping cough | Correct |

In our test, ChatGPT showed an impressive ability to identify common medical conditions based solely on their symptoms. Given the NHS-listed symptoms for 40 conditions, it included the correct condition in its suggestions for 36 of them, a 90% accuracy rate, without ever seeing a patient in person.
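The headline figure is simple arithmetic: 36 correct out of 40 gives 36 ÷ 40 = 0.9, or 90%. Here is a minimal Python sketch of that tally, with the 40 conditions transcribed by hand from the results table (names normalised to sentence case) and the four rows marked "Incorrect" held in a set. `CONDITIONS`, `INCORRECT` and `tally` are illustrative names introduced here, not part of the original study.

```python
from collections import Counter

# The 40 conditions from the results table, transcribed by hand.
CONDITIONS = [
    "Athlete's foot", "Bacterial vaginosis", "Baker's cyst", "Bell's palsy",
    "Bladder stones", "Bronchiolitis", "Cellulitis", "Chest infection",
    "Conjunctivitis", "Croup", "Ear infection", "Fibroids", "Flu",
    "Genital herpes", "Glue ear", "Gonorrhoea", "Hives", "Huntington's disease",
    "Inflammatory bowel disease", "Iron deficiency anaemia", "Lyme disease",
    "Mastitis", "Meningitis", "Oral thrush", "Piles (haemorrhoids)", "Raynaud's",
    "Reflux", "Sciatica", "Shin splints", "Shingles", "Sinusitis", "Sleep apnoea",
    "Stomach ulcer (peptic ulcer)", "Stye", "Syphilis", "Tinnitus", "Tonsillitis",
    "Ulcerative colitis", "Underactive thyroid (hypothyroidism)", "Whooping cough",
]

# The four conditions the table marks "Incorrect".
INCORRECT = {"Bladder stones", "Fibroids", "Glue ear", "Gonorrhoea"}


def tally(conditions, incorrect):
    """Count Correct/Incorrect marks and return (counts, accuracy as a fraction)."""
    marks = Counter("Incorrect" if c in incorrect else "Correct" for c in conditions)
    return marks, marks["Correct"] / len(conditions)


marks, acc = tally(CONDITIONS, INCORRECT)
print(f"{marks['Correct']}/{len(CONDITIONS)} correct = {acc:.0%}")  # prints "36/40 correct = 90%"
```

Because the marking rule counts a condition as correct whenever it appears anywhere among the AI's suggestions, this figure measures whether the right answer was on the list, not whether it was the single top diagnosis.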

AI could potentially diagnose illnesses within seconds, and in our test it was right 90% of the time. As the technology advances, could AI also venture into prescribing treatments at precise dosages? Such a development could revolutionise GP surgeries, easing long waiting times and the frequent unavailability of face-to-face appointments.

Is AI ready to become a doctor?

Despite these promising possibilities, it is important to acknowledge the limitations of AI in the medical realm. AI lacks nuance and a patient’s personal medical history, and it cannot currently conduct physical examinations. Most importantly, it will need to earn the public’s trust, which is a significant hurdle to overcome.

However, even within these constraints, AI has the potential to substantially reduce the time doctors spend on diagnoses, assist in improving the accuracy of diagnoses made by human professionals, and enhance overall patient care.

This is just the beginning of AI's integration into the medical field. The 90% accuracy rate our experiment found in diagnosing common medical conditions highlights its potential.

Could the initial step be medical professionals harnessing AI for faster patient care?

Methodology

We selected a random sample of 40 medical conditions from the NHS site that patients would typically see a GP about first. We input the exact symptoms listed on the NHS website for each condition and asked ChatGPT to provide a diagnosis. If ChatGPT suggested the correct medical condition in its response, we marked it as a correct diagnosis; if the correct condition was absent from its suggestions, we marked it as an incorrect diagnosis.